
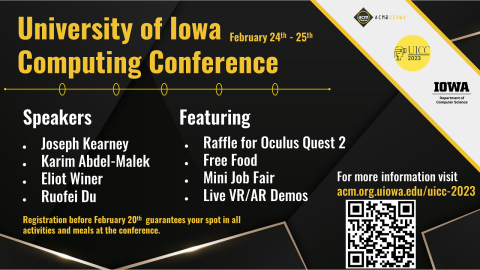

UICC 2023

Schedule

Friday, February 24, 2023

| Time | Event |
| --- | --- |
| 5:30pm | Talk 1 (Keynote): (Shambaugh, Main Library) Joe Kearney |
| 7:15pm | Activity + Food: (Adler Journalism Building Rotunda + AJB E120 + AJB E126) |

Saturday, February 25, 2023

| Time | Event |
| --- | --- |
| 11:00am | Talk 2: (BCSB 101) Dr. Karim Abdel-Malek |
| 12:00pm | Lunch and Job Fair: (AJB Rotunda) |
| 2:45pm | Talk 3: (BCSB 101) Dr. Eliot Winer |
| 4:00pm | Talk 4: (BCSB 101) Dr. Ruofei Du |
| 5:15pm | Closing Announcements: (BCSB 101) |
| 5:30pm | Activities + Food: (AJB E120 + AJB E126 + Rotunda) |

Speakers

Joseph Kearney

Abstract: VR Research in Three Parts

This talk will give an overview of three research initiatives that use VR technology. The first part will present a series of studies that used large-screen pedestrian and bicycling simulators to examine how two people coordinate their decisions and actions when crossing a stream of traffic. The studies reveal the powerful influence that others have on how we make consequential decisions in performing routine but potentially dangerous activities. The second part will examine a series of studies looking at how mobile technology can help pedestrians to safely cross traffic-filled roadways. The last part will present an experiment in using VR to create immersive theatrical experiences.

Bio:

Joe Kearney received a B.A. in psychology from the University of Minnesota, an M.A. in psychology from the University of Texas, and an M.S. and Ph.D. in computer science from the University of Minnesota. He is a Professor of Computer Science at the University of Iowa. He previously served as Chair of the Department of Computer Science, Director of the School of Library and Information Science, and Associate Dean and Interim Dean in the College of Liberal Arts and Sciences. Professor Kearney co-directs the Hank Virtual Environments Lab with Professor Jodie Plumert. His research focuses on how virtual environments can be used as laboratories for the study of human perception, action, and decision making, with special focus on how children and adults cross traffic-filled roadways on a bike or on foot. Recent projects have included studies on how pedestrians and cyclists respond to adaptive headlights that highlight them on the edge of the road, the influence of texting on pedestrian road crossing, the effectiveness of V2P technology that sends cell phone alerts and warnings to pedestrians, how two pedestrians jointly cross gaps in a stream of traffic, and distributed simulators that connect pedestrian and driving simulators in a shared virtual environment.

Karim Abdel-Malek

Abstract: Virtual Humans SANTOS and SOPHIA

A human simulator developed at the University of Iowa aids in the reduction of load for the US Marines, tests new gear for the US Army, and helps design new vehicles for the auto manufacturing industry. SANTOS and SOPHIA are digital twins developed over the past 15 years. An integrated virtual human including biomechanics, physiology, and AI models has been developed to work inside SANTOS. The underlying formulation for predicting human motion while considering the biomechanics will be presented. Predictive Dynamics (PD) is a term coined to characterize a new methodology for predicting human motion while considering the dynamics of the human and the environment. While multibody dynamics typically uses numerical integration to determine the motion of a system of rigid bodies that has been subjected to external forces or is in motion, PD uses optimization and human performance measures to predict the motion. Physiology modeling, including heat strain, work rest, extended load carriage, hydration, and many other parameters, has been implemented into the model. The SANTOS platform provides a unique comprehensive system for simulating human motion, predicting what a human can do, and conducting trade-off analysis, leading to significant cost reductions. Virtual experimentation for new equipment and sending SANTOS to test new designs is at the heart of its capabilities.

Bio:

Karim Abdel-Malek is internationally recognized in the area of human simulation. He serves as the Director of the Iowa Technology Institute at the University of Iowa, a world-renowned research center with 6 units and about 150 researchers. He is the creator of SANTOS®, the human digital twin, and founder of 4 technology companies, including Santos Human Inc., a comprehensive virtual platform for human simulation that has been developed over the past 15 years for the US Military. Dr. Abdel-Malek has led projects with many services of the US Military and several industry partners including Ford, GM, Chrysler, Rockwell Collins Aerospace, Caterpillar, and others. His work on the development of mathematical models for predicting human motion has been extensively published and is the subject of a book entitled “Predictive Dynamics”. Dr. Abdel-Malek received his MS and PhD in robotics and simulation from the University of Pennsylvania. He has authored over 250 publications and 4 books.

Eliot Winer

Abstract: Increasing the Effectiveness of Extended Reality to a Wider Range of Disciplines - Time for this Stuff to Get Useful

Extended Reality (XR) technologies are advancing at breakneck speed and are finally beginning to impact individuals’ daily lives. However, many people see these technologies as simply for games and entertainment and/or for the younger generation. In this talk, we will explore what XR is, a brief history of it, the range of disciplines in which it is currently being used, and what it will take for these technologies to truly become part of our everyday lives. We will also delve into uses of these technologies to train doctors, treat post-traumatic stress disorder (PTSD), and aid in engineering applications.

Bio:

Eliot Winer is currently director of the VRAC (Visualize. Reason. Analyze. Collaborate.), a professor in the Departments of Mechanical Engineering (main), Electrical and Computer Engineering (courtesy), and Aerospace Engineering (courtesy), and a faculty member of the Human Computer Interaction Graduate Program at Iowa State University. He received a B.S. in Aerospace Engineering from The Ohio State University in 1992 and M.S. and Ph.D. degrees in Mechanical Engineering from the University at Buffalo in 1994 and 1999. He teaches courses on mechanical systems design, optimization, and professional ethics. His research interests include large-scale collaborative design methods; analysis, visualization, and interaction with large data sets (i.e., “Big Data”); machine learning methods; multidisciplinary design analysis and optimization; computer-aided design and graphics; and extended reality (XR) for use in engineering design and manufacturing. He has had funding from a variety of sources including John Deere, the Boeing Company, the Department of the Army, Air Force Office of Scientific Research, NSF, Department of Energy, the Federal Highway Administration, the FAA, and the National Institute of Food and Agriculture. He is also a co-founder of three startup companies, the latest being BodyViz.com.

Ruofei Du

Abstract: Interactive Graphics for a Universally Accessible Metaverse

With the dramatic growth of virtual and augmented reality, ubiquitous information is created from both the virtual and the physical worlds. However, bridging the real and the virtual worlds and blending the metaverse into our daily lives remain a challenge. In this talk, I will present several interactive graphics technologies that empower the metaverse with more universal accessibility. With the consecutive works in Geollery.com and kernel foveated rendering, we present real-time pipelines for reconstructing a mirrored world, along with acceleration techniques. With DepthLab, Ad hoc UI, and SlurpAR, we present real-time 3D interactions with depth maps, everyday objects, and hand gestures. With Montage4D and HumanGPS, we demonstrate the great potential of digital humans in the metaverse. With CollaboVR, GazeChat, SketchyScenes, and ProtoSound, we enhance communication with mid-air sketches, gaze-aware 3D photos, and customized sound recognition. Finally, we conclude the talk with video clips from the Google I/O 2022 Keynote to envision the future of a universally accessible metaverse.

Bio:

Ruofei Du is a Senior Research Scientist at Google and works on creating novel interactive technologies for virtual and augmented reality. Du's research covers a wide range of topics in VR and AR, including interactive graphics, augmented communication, mixed-reality social platforms, video-based rendering, foveated rendering, and deep learning in graphics. His research has been featured by Engadget, The Verge, PC Magazine, VOA News, cnBeta, and others. Du serves as an Associate Editor for IEEE Transactions on Circuits and Systems for Video Technology and Frontiers in Virtual Reality. He also served as a committee member for CHI 2021-2023, UIST 2022, and SIGGRAPH Asia 2020 XR. He holds 3 US patents and has published over 30 peer-reviewed publications in top venues of HCI, Computer Graphics, and Computer Vision, including CHI, SIGGRAPH Asia, UIST, TVCG, CVPR, ICCV, ECCV, ISMAR, VR, and I3D. Du holds a Ph.D. and an M.S. in Computer Science from the University of Maryland, College Park, and a B.S. from ACM Honored Class, Shanghai Jiao Tong University. Website: https://duruofei.com